I have finally completed the AI Graduate Certificate program at Stanford University and obtained this prestigious certificate in artificial intelligence. It was intensive and difficult, and several times I considered giving up. My math skills were rusty, and with full-time work and family obligations I never had enough time, but in the end I managed it!

I had been thinking about taking this program for several years. Once I decided to focus my career specifically on artificial intelligence, I gave it a go, as I already knew that Stanford University offers excellent technical education, especially in this domain. I had to complete four courses, each taking 10 intensive weeks of video lectures, reading assignments, programming assignments, quizzes and exams, and in some cases a course project.

My first course was CS224N (NLP with Deep Learning), as I love language and am very interested in how machines can process it or, as some people say, “understand” it. It was really great to learn the theoretical concepts from Prof. Christopher Manning and to do practical exercises in Python and PyTorch, implementing language models, word embeddings, and transformers for use cases like classification, sentiment analysis, and translation.

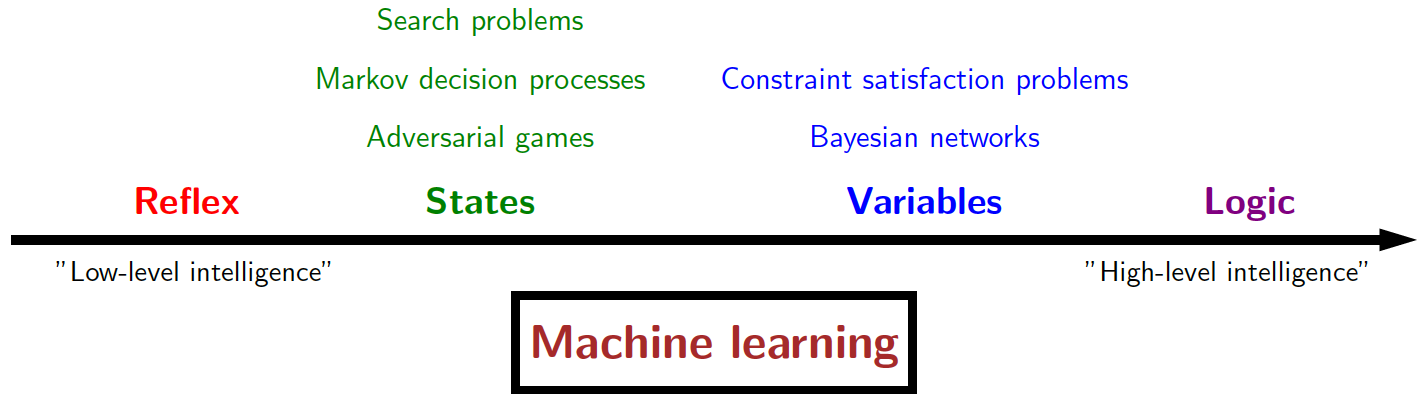

During the Covid-19 lockdown I took a three-month break, and then continued in September 2020 with the mandatory module CS221 (Principles and Techniques of Artificial Intelligence). This course gave structure to the field by organizing it around the approach used to arrive at a solution, which can be based on statistical inference, dynamic programming, Markov decision processes, game theory, Bayesian networks, or logic.

My third course was CS234 (Reinforcement Learning). I believe this is the future of AI, especially at its intersection with other techniques like deep learning. Reinforcement learning uses external feedback (rewards) to find the optimal way of choosing actions, called a ‘policy’. It is widely used in game-playing agents and is one of the key elements enabling robotics. We can first try to build a model of our environment (model-based RL), or we can try to succeed in it without a model (model-free RL). The main techniques are policy and value iteration, Q-learning, DQN, policy gradient, and newer combined methods that include simulation-based search.
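To give a flavor of value iteration, here is a minimal sketch in NumPy. It is not taken from the course material: the three-state chain, its rewards, and the names `P`, `R`, and `value_iteration` are my own illustrative assumptions. It assumes the model (transition probabilities and rewards) is known, which is the model-based setting.

```python
import numpy as np

def value_iteration(P, R, gamma=0.9, tol=1e-8, max_iters=10_000):
    """Value iteration for a small, fully known MDP.

    P: array of shape (A, S, S), P[a, s, s2] = probability of s -> s2 under action a.
    R: array of shape (A, S), expected immediate reward for taking action a in state s.
    Returns the state values V and a greedy policy (one action index per state).
    """
    n_states = P.shape[1]
    V = np.zeros(n_states)
    for _ in range(max_iters):
        # Bellman optimality backup: Q[a, s] = R[a, s] + gamma * sum_s2 P[a, s, s2] * V[s2]
        Q = R + gamma * (P @ V)
        V_new = Q.max(axis=0)
        converged = np.max(np.abs(V_new - V)) < tol
        V = V_new
        if converged:
            break
    policy = (R + gamma * (P @ V)).argmax(axis=0)
    return V, policy

# Hypothetical toy problem: a chain of states 0 - 1 - 2, where state 2 is absorbing.
# Action 0 moves left, action 1 moves right; stepping from state 1 into state 2 pays 1.
P = np.zeros((2, 3, 3))
P[0, 0, 0] = P[0, 1, 0] = P[0, 2, 2] = 1.0  # "left"
P[1, 0, 1] = P[1, 1, 2] = P[1, 2, 2] = 1.0  # "right"
R = np.zeros((2, 3))
R[1, 1] = 1.0

V, policy = value_iteration(P, R)
print(V, policy)
```

In this toy chain the greedy policy moves right from states 0 and 1, and the value of state 0 is the reward of 1 discounted by one step. Model-free methods such as Q-learning estimate the same kind of Q-values from sampled transitions instead of from `P` and `R`.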

The fourth and last course was CS229 (Machine Learning). It is often considered one of the most famous courses in machine learning. I learned how various probability distributions can be expressed in the exponential family representation, and how we can construct a generalized linear model from them. I also learned how a non-linear model can be turned into a model that is linear in non-linear features of the data rather than in the raw data, so that it can be handled more easily with existing algorithms. Then there was the difference between discriminative and generative models, which can be used for different purposes. We also learned about support vector machines and kernels, perceptrons and neural networks, and various unsupervised learning techniques. It was fun coding a deep neural network for the MNIST dataset in NumPy and, as my course project, adding a quantum classifier to it.
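The idea of a model that is linear in non-linear features can be shown with a short sketch. This is my own toy example, not a course assignment: the quadratic target, the noiseless data, and the helper name `poly_features` are assumptions made for illustration.

```python
import numpy as np

def poly_features(x, degree=2):
    """Map each scalar x to the feature vector [1, x, x^2, ..., x^degree]."""
    return np.vander(x, N=degree + 1, increasing=True)

# Hypothetical noiseless data from a curve that is non-linear in x.
x = np.linspace(-2.0, 2.0, 21)
y = 1.0 + 2.0 * x - 3.0 * x**2

# The model y = theta . phi(x) is linear in theta, so ordinary least squares applies.
Phi = poly_features(x, degree=2)
theta, *_ = np.linalg.lstsq(Phi, y, rcond=None)
print(theta)
```

The fit recovers the coefficients of the quadratic even though the solver only ever sees a linear problem. Kernels take the same idea further by working with inner products of features without building them explicitly.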

I now feel more competent not only to implement different models, but also to evaluate them, to design them according to the problem at hand, and to research more advanced concepts.

Sasha Lazarevic

July 2021